Language

Python

Tool Type

Algorithm

License

The MIT License

Version

1.0.0

General Directorate of Institutional Quality and Open Government, Government of the City of Buenos Aires

Dataset quality analysis is a tool that validates the structure of datasets added to the Buenos Aires open data portal. By checking the consistency and accuracy of published information, it facilitates data transfer between agencies and supports the training and skills transfer needed to sustain open data portals.

Dataset quality analysis addresses the challenge of maintaining data quality and consistency in open data portals, so that the information is accurate and reliable for government agencies and the public.

Automated Data Collection:

- Web-Based Data Collection: The tool can automatically gather data from various online sources, reducing the need for manual downloads.
- FTP Data Integration: Seamlessly integrates with FTP servers to fetch essential datasets, simplifying data acquisition from legacy systems or partners.

Data Quality Assurance:

- Customizable Data Cleaning: Ensures that the data meets quality standards by applying specific cleaning rules tailored to each dataset, thus maintaining data integrity.
- Dynamic Data Handling: Adapts to different data structures, making it versatile for handling diverse datasets without constant code adjustments.

Insightful Data Exploration:

- Geographical Data Insights: Analyzes and visualizes geographical data, providing spatial insights crucial for sectors like urban planning, logistics, or environmental monitoring.
- Interactive Data Analysis: Through Jupyter notebooks, stakeholders can interactively explore data, aiding in hypothesis testing and decision-making.

Configurable Operations:

- Dataset Management: Uses a centralized list to manage and prioritize which datasets the tool handles, ensuring that only relevant data is processed.
- User-Driven Operations: Allows users to specify tasks, such as downloading or cleaning, using simple command-line arguments, offering flexibility in operations.

Transparency & Monitoring:

- Activity Logging: Keeps track of all operations, ensuring transparency in data processing and aiding in troubleshooting or audits.

Scalability & Integration:

- Modular Design: The tool's modular architecture ensures it can be expanded or integrated with other systems.
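The combination of a centralized dataset list, command-line task selection, and activity logging described above could look roughly like the following sketch. It is not the tool's actual interface: the dataset names, the `download` and `clean` handlers, the `--dataset` flag, and the logger name are all hypothetical and exist only to illustrate the pattern.

```python
import argparse
import logging

# Hypothetical central dataset list; these names are invented for illustration.
DATASETS = ["bike-stations", "public-schools"]


# Hypothetical task handlers; a real tool would fetch or clean files here.
def download(dataset):
    return f"downloaded {dataset}"


def clean(dataset):
    return f"cleaned {dataset}"


TASKS = {"download": download, "clean": clean}


def build_parser():
    """Build a parser with one positional task and an optional dataset filter."""
    parser = argparse.ArgumentParser(description="Dataset task runner (sketch)")
    parser.add_argument("task", choices=sorted(TASKS))
    parser.add_argument(
        "--dataset",
        choices=DATASETS,
        default=None,
        help="limit the run to one dataset from the central list",
    )
    return parser


def run(argv):
    """Run the chosen task on the selected datasets and log each operation."""
    args = build_parser().parse_args(argv)
    logger = logging.getLogger("dataset_quality_sketch")
    selected = [args.dataset] if args.dataset else DATASETS
    results = []
    for name in selected:
        message = TASKS[args.task](name)
        logger.info(message)  # activity log entry for audits and troubleshooting
        results.append(message)
    return results
```

Calling `run(["clean"])` processes every dataset in the list, while `run(["download", "--dataset", "public-schools"])` restricts the run to one entry. Configuring `logging.basicConfig(level=logging.INFO)` beforehand makes the log entries visible.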

Built with Python 3.6.3, the tool uses Scrapy for web scraping and pandas for data analysis. It handles data in CSV, JSON, and geospatial formats to support interoperability, and relies on geospatial libraries such as Fiona and geopandas for geographic data. Data download and cleaning are configured through manifest.json, and cleaning follows a modular approach with rules defined in JSON. The tool also retrieves data from FTP servers and accommodates dynamic database structures.
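To illustrate the idea of cleaning rules defined in JSON, here is a minimal sketch. The manifest layout, the rule fields (`column`, `action`, `value`), and the three actions are assumptions made for this example; the real schema of manifest.json is not documented here. Plain dictionaries stand in for pandas DataFrames so that the sketch runs with the standard library only.

```python
import json

# Hypothetical manifest content; the real manifest.json schema may differ.
MANIFEST_JSON = """
{
  "datasets": [
    {
      "name": "example-dataset",
      "rules": [
        {"column": "name", "action": "strip"},
        {"column": "name", "action": "uppercase"},
        {"column": "district", "action": "fill_empty", "value": "UNKNOWN"}
      ]
    }
  ]
}
"""


def apply_rule(row, rule):
    """Apply one JSON-defined rule to a single row (a dict of strings)."""
    column = rule["column"]
    value = row.get(column, "")
    if rule["action"] == "strip":
        row[column] = value.strip()
    elif rule["action"] == "uppercase":
        row[column] = value.upper()
    elif rule["action"] == "fill_empty":
        if value.strip() == "":
            row[column] = rule["value"]
    else:
        raise ValueError(f"unknown action: {rule['action']}")
    return row


def clean_rows(rows, rules):
    """Return cleaned copies of the rows, applying the rules in order."""
    cleaned = []
    for row in rows:
        row = dict(row)  # copy so the input rows stay untouched
        for rule in rules:
            row = apply_rule(row, rule)
        cleaned.append(row)
    return cleaned


manifest = json.loads(MANIFEST_JSON)
rules = manifest["datasets"][0]["rules"]
rows = [
    {"name": "  palermo ", "district": ""},
    {"name": "Recoleta", "district": "2"},
]
cleaned = clean_rows(rows, rules)
```

Keeping the rules in data rather than code means a new dataset can get its own cleaning steps by editing the JSON, without touching the functions that apply them.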

Connect with the Development Code team and discover how our carefully curated open source tools can support your institution in Latin America and the Caribbean. Contact us to explore solutions, resolve implementation issues, share reuse successes or present a new tool. Write to [email protected]

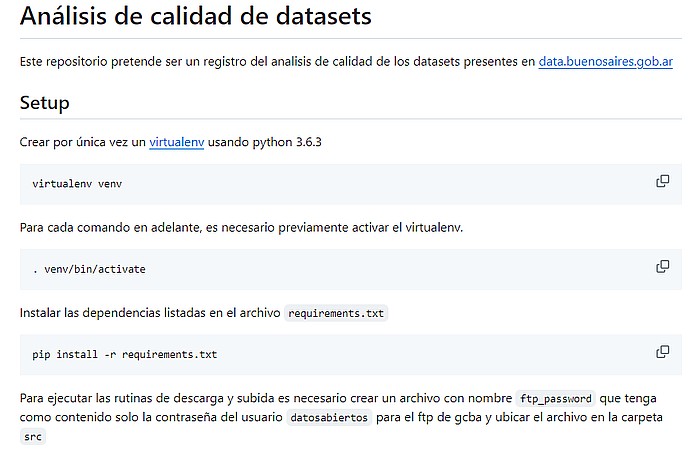

This image is a screenshot of documentation for a dataset quality analysis repository, including setup instructions and Python virtual environment activation steps.

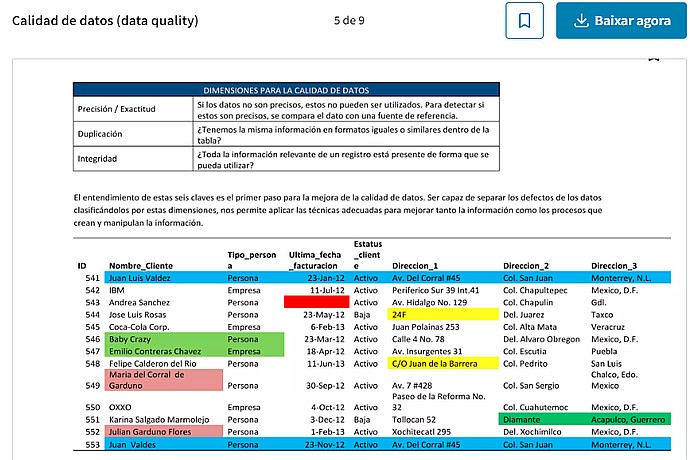

This image shows a screenshot of a data quality document in Spanish, detailing dimensions of data accuracy, duplication, and integrity, alongside a sample dataset table.

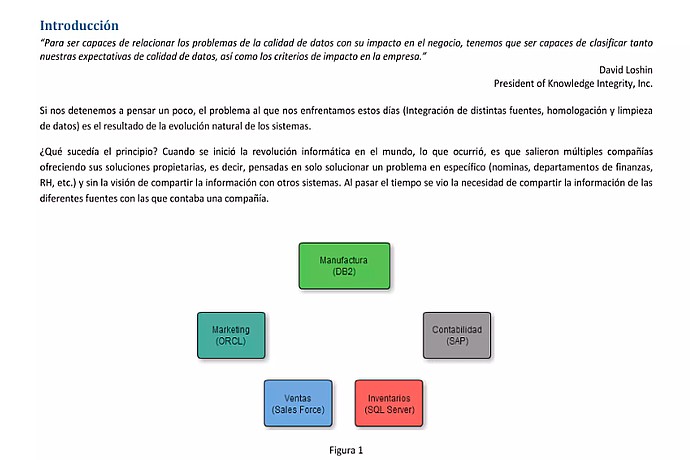

This image shows a text excerpt about data quality issues and a diagram (Figure 1) illustrating the integration of different business areas like manufacturing, marketing, and accounting.

Official publication and quality guidelines.

Success story thanks to implementation of improvements.